In this post I will share code to summarize a news article using Python's Natural Language Toolkit (NLTK).

For this example, I'll extract an article from The Hindu using BeautifulSoup and summarize it using a word frequency distribution.
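Before the full script, the idea behind frequency-based summarization can be tried on a toy text with only the standard library. This is a rough sketch, not the NLTK code used in this post: the regex sentence splitter, the tiny `STOP_WORDS` set, the `toy_text` sample, and the `summarize_simple` name are all assumptions made for illustration, and `collections.Counter` stands in for NLTK's `FreqDist`.

```python
import re
from collections import Counter
from heapq import nlargest

# Tiny illustrative stop-word list (NLTK's English list is much longer)
STOP_WORDS = {'the', 'a', 'an', 'is', 'are', 'of', 'and', 'to', 'in', 'it', 'on'}

def summarize_simple(text, n):
    """Return the n highest-scoring sentences of text, in original order."""
    # Naive sentence split: break after ., ! or ? followed by whitespace
    sentences = [s for s in re.split(r'(?<=[.!?])\s+', text.strip()) if s]
    # Count every lowercase word that is not a stop word
    words = [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOP_WORDS]
    freq = Counter(words)
    # A sentence's score is the sum of the frequencies of its words
    scores = {
        i: sum(freq[w] for w in re.findall(r"[a-z']+", s.lower()))
        for i, s in enumerate(sentences)
    }
    best = nlargest(n, scores, key=scores.get)
    return [sentences[i] for i in sorted(best)]

# Hypothetical sample text
toy_text = (
    "Water purifiers are popular. "
    "Reverse osmosis purifiers waste water. "
    "Cats sleep a lot."
)
print(' '.join(summarize_simple(toy_text, 2)))
# prints: Water purifiers are popular. Reverse osmosis purifiers waste water.
```

The two sentences that share the frequent words "water" and "purifiers" outscore the unrelated one, which is the whole trick: sentences full of the article's most common words are assumed to carry its main point.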

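# One-time setup: sent_tokenize/word_tokenize need NLTK's 'punkt' tokenizer data and
# stopwords needs the 'stopwords' corpus, e.g. nltk.download('punkt') and
# nltk.download('stopwords') (recent NLTK releases may ask for 'punkt_tab' instead).
import re
from string import punctuation

import requests
from bs4 import BeautifulSoup
from nltk.corpus import stopwords
from nltk.tokenize import sent_tokenize, word_tokenize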

article_url = 'https://www.thehindu.com/opinion/editorial/purifying-water-the-hindu-editorial-on-draft-notification-on-ro-systems/article30745293.ece'

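# Extract the article text from the HTML page
def get_article_text(url):
    # Fetch the page at `url` and fail early on an HTTP error
    page = requests.get(url)
    page.raise_for_status()
    soup = BeautifulSoup(page.text, features='lxml')
    # The article body is the <div> whose id contains 'content-body'
    content = soup.find('div', id=re.compile('content-body'))
    if content is None:
        raise ValueError('No content-body <div> found at ' + url)
    # Join the text of every paragraph in the body into one string
    return ' '.join([p.text for p in content.find_all('p')])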

content_text = get_article_text(article_url)
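To see what the extraction step does without hitting the network, here is a minimal sketch that runs the same `find` / `find_all` calls on a small inline HTML string. The markup, the `sample_html` name, and the `extract_paragraphs` helper are made up for illustration, and the sketch uses Python's built-in `html.parser` instead of `lxml` so it needs nothing beyond BeautifulSoup itself. The real page structure on The Hindu may differ.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical markup standing in for an article page
sample_html = """
<html><body>
  <div id="nav"><p>Home | Opinion</p></div>
  <div id="content-body-123">
    <p>First paragraph.</p>
    <p>Second paragraph.</p>
  </div>
</body></html>
"""

def extract_paragraphs(html):
    """Return the text of all <p> tags inside the first div whose id contains 'content-body'."""
    soup = BeautifulSoup(html, features='html.parser')
    content = soup.find('div', id=re.compile('content-body'))
    if content is None:
        # No matching container: return an empty string instead of failing
        return ''
    return ' '.join(p.text for p in content.find_all('p'))

print(extract_paragraphs(sample_html))
# prints: First paragraph. Second paragraph.
```

The navigation `<div>` is skipped because its id does not match the pattern, so only the article paragraphs end up in the text that gets summarized.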

from collections import defaultdict
from heapq import nlargest

from nltk.probability import FreqDist

# Summarize the article with its 'n' highest-scoring sentences
def summarize(content_text, n):
    sent_tokens = sent_tokenize(content_text)
    # Lowercase the words so the counts match the lowercased lookup in the scoring loop
    word_tokens = word_tokenize(content_text.lower())
    _stop_words = set(stopwords.words('english') + list(punctuation))
    word_tokens_wo_stopwords = [word for word in word_tokens if word not in _stop_words]

    # Frequency distribution of the remaining words
    freq = FreqDist(word_tokens_wo_stopwords)
    # To inspect the ten most frequent words: nlargest(10, freq, key=freq.get)

    # Score each sentence by summing the frequencies of the words it contains
    ranking = defaultdict(int)
    for i, sent in enumerate(sent_tokens):
        for w in word_tokenize(sent.lower()):
            if w in freq:
                ranking[i] += freq[w]

    # Pick the n best sentences, then return them in their original order
    sent_idx = nlargest(n, ranking, key=ranking.get)
    return [sent_tokens[j] for j in sorted(sent_idx)]

print(' '.join(summarize(content_text, 3)))

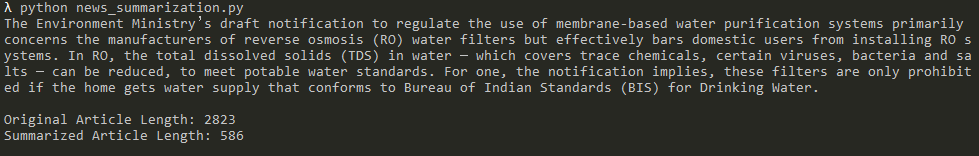

Final output looks like this;

Hope this helps!